Hardware decisions for running local AI workloads have become a battleground of price, compatibility, and software support. The AMD Pro W7800 has surfaced as a candidate for those wanting to sidestep NVIDIA’s pricing and proprietary lock-in, especially for home lab setups focused on inference of large language models like Llama. The W7800 offers a substantial 96GB of VRAM across two cards, with ROCm (AMD’s open compute stack) promising partitioning support. However, a search for real-world benchmarks on LLM inference or Stable Diffusion yields little more than productivity (e.g., Blender) benchmarks (more: url). This lack of visibility is a recurring issue outside the NVIDIA ecosystem, where CUDA dominance means open-source projects and frameworks often lag in supporting alternatives. For experimenters, this means rolling the dice: hardware might be technically capable, but the software stack—drivers, libraries, and model support—remains a moving target.

On the other end of the spectrum, some users are repurposing aging NVIDIA hardware in bulk. One enthusiast squeezed sixteen Tesla P100s into a single server, an impressive feat of hardware recycling. While it does not deliver bleeding-edge throughput or PCIe bandwidth, this setup is still more affordable than a single flagship RTX 5090. The goal is to run large models such as Qwen3-235B with very large context windows, but practical issues arise: performance with llama.cpp is poor, and attempts to leverage parallelism with vLLM have hit roadblocks (more: url). The bottleneck isn’t just raw GPU power but also the host CPU and the limitations of the PCIe lanes. It’s a reminder that scaling up with legacy hardware can be clever, but software compatibility and bandwidth constraints may quickly become the weakest links.

For those shopping for ML laptops, the confusion around VRAM requirements remains high. Many assume that RAM and VRAM can be pooled, but for LLMs like Qwen 2.5 (multimodal) with 7-8B parameters, 24GB or more of dedicated GPU memory is needed. Apple’s shared memory architecture can provide this, but on the NVIDIA side, only high-end RTX cards (e.g., 4090) approach these specs. Mainstream options often top out at 8 or 16GB, making Apple Silicon laptops a rare but valid choice for those unwilling to invest in desktop-class GPUs (more: url). In short, hardware for local AI remains a patchwork of trade-offs between price, compatibility, and support.
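To make the VRAM arithmetic concrete, here is a rough back-of-the-envelope sketch. It assumes memory is dominated by the weights (parameters times bytes per parameter) and applies an illustrative 1.2x overhead factor for KV cache and runtime buffers. Both the factor and the function name `estimate_vram_gb` are assumptions for illustration, not measured values or part of any library.

```python
# Rough rule-of-thumb estimate of the memory footprint of a dense LLM.
# The 1.2x overhead factor (KV cache, activations, runtime buffers) is an
# illustrative assumption, not a measurement.

BYTES_PER_PARAM = {"fp16": 2.0, "int8": 1.0, "int4": 0.5}


def estimate_vram_gb(params_billions: float, precision: str = "fp16",
                     overhead: float = 1.2) -> float:
    """Return an approximate memory footprint in GB (1 GB = 1e9 bytes)."""
    weight_bytes = params_billions * 1e9 * BYTES_PER_PARAM[precision]
    return weight_bytes * overhead / 1e9


for size in (7, 8):
    for precision in ("fp16", "int8", "int4"):
        gb = estimate_vram_gb(size, precision)
        print(f"{size}B @ {precision}: ~{gb:.1f} GB")
```

Under these assumptions a 7-8B model at fp16 already exceeds a 16GB card once overhead is included, while quantized variants fit in far less. Long contexts and multimodal encoders add to the total.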

The quest for frictionless, AI-native productivity tools is active but fragmented. Users are searching for open-source document editors that blend the familiar experience of Word or iA Writer with conversational AI features: tab completion, section rewriting, idea branching, and in-context suggestions. Despite the demand, no standout native (non-web) open-source project has emerged to rival commercial tools or browser-based solutions (more: url). This gap is striking, considering the rapid evolution of LLM APIs and plugins. The technical challenge is not just UI design but seamless integration with local or cloud-based models, low-latency inference, and privacy-preserving workflows.

In the terminal, however, new interfaces are gaining traction. Yappus, a terminal-native LLM interface written in Rust, promises fast, local-first AI assistance without the overhead of HTTP servers or GUIs. Designed as a scriptable CLI tool, it integrates directly with the filesystem and shell, aiming to streamline tasks like error explanation, command lookup, and even “bash scripting and googling”—essentially, a smarter, faster alternative to tldr or manual pages. The project is still early but stable enough for daily use, and plans for Ollama integration are underway (more: url). This reflects a trend toward embedding LLMs into core developer workflows, prioritizing speed and local control over cloud dependence or heavyweight interfaces.

On the database side, connecting open-source web UIs (like OpenWebUI) to shared MySQL backends remains a pain point. Syntax for DATABASE_URL strings can be surprisingly brittle, with MySQL connection formats differing subtly from PostgreSQL. Even with a working database and command-line access, configuring the correct connection string in application code may trigger obscure formatting errors (more: url). This highlights a recurring reality: even as AI models advance, glue code and configuration details can still derail otherwise simple deployments.
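One common source of such formatting errors is special characters in credentials. The sketch below assumes the application accepts SQLAlchemy-style URLs. The `mysql+pymysql` driver name, host, database, and credentials are hypothetical placeholders, and whether a given OpenWebUI build supports MySQL at all is not confirmed here.

```python
from urllib.parse import quote_plus, urlsplit


def build_db_url(scheme: str, user: str, password: str, host: str,
                 port: int, database: str) -> str:
    """Assemble a SQLAlchemy-style URL, percent-encoding the credentials."""
    return (f"{scheme}://{quote_plus(user)}:{quote_plus(password)}"
            f"@{host}:{port}/{database}")


# Hypothetical credentials; note the '@' and '/' inside the password,
# which would break the URL if left unencoded.
mysql_url = build_db_url("mysql+pymysql", "webui", "p@ss/word",
                         "db.local", 3306, "openwebui")
postgres_url = build_db_url("postgresql", "webui", "p@ss/word",
                            "db.local", 5432, "openwebui")

# Parse the result back to confirm host, port, and database survive intact.
parts = urlsplit(mysql_url)
print(mysql_url)
print(postgres_url)
print(parts.hostname, parts.port, parts.path)
```

The two URLs differ only in scheme and default port. The encoding step is what keeps the parser from splitting the string at the wrong `@` or `/`.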

The AI agent ecosystem is rapidly moving toward interoperability, with open protocols and standards aiming to break down silos between disparate agentic applications. Google’s Agent2Agent (A2A) protocol is a prime example: it enables “opaque” agents—those built on different frameworks, running on separate servers, and guarding their internal logic—to discover each other, negotiate interaction modalities, and collaborate securely (more: url). A2A relies on JSON-RPC 2.0 over HTTP(S), with “Agent Cards” describing capabilities, and supports text, files, and streaming data. The design emphasizes not just interoperability but also privacy and IP protection, allowing agents to work together without exposing proprietary internals.
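As a minimal sketch of what "JSON-RPC 2.0 over HTTP(S)" means in practice, the snippet below builds a request envelope. Only the envelope fields (`jsonrpc`, `id`, `method`, `params`) come from the JSON-RPC 2.0 specification. The method name, the Agent Card fields, and the message shape are illustrative assumptions and are not taken from the A2A specification.

```python
import json
import uuid


def make_jsonrpc_request(method: str, params: dict, request_id=None) -> dict:
    """Build a JSON-RPC 2.0 request object."""
    if request_id is None:
        request_id = str(uuid.uuid4())
    return {
        "jsonrpc": "2.0",   # fixed version string required by JSON-RPC 2.0
        "id": request_id,   # lets the caller match the response to the request
        "method": method,
        "params": params,
    }


# Hypothetical Agent Card: the field names here are assumptions for illustration.
agent_card = {
    "name": "example-agent",
    "url": "https://agent.example.com/a2a",
    "capabilities": {"streaming": True},
}

request = make_jsonrpc_request(
    "example/sendMessage",  # hypothetical method name
    {"message": {"role": "user", "text": "Summarize open issues"}},
    request_id="req-1",
)

# This JSON body would be POSTed to the URL advertised in the Agent Card.
print(agent_card["url"])
print(json.dumps(request, indent=2))
```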

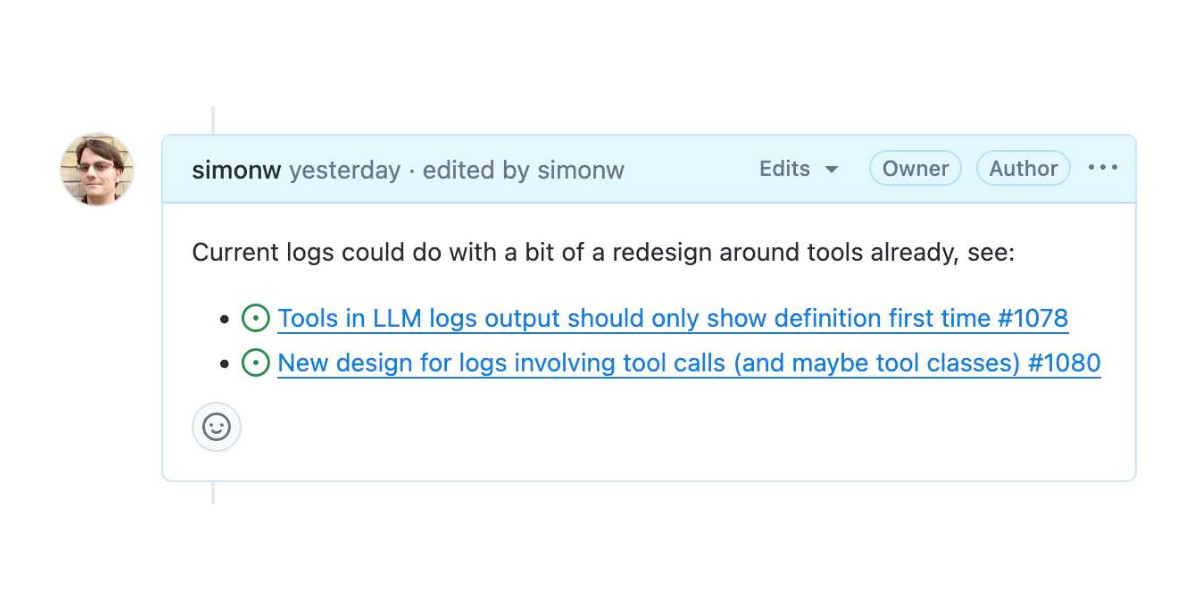

This protocol-driven approach is timely, as the current generation of LLM tools (especially those using Model Context Protocol, or MCP) faces real-world scaling issues. A common workflow involves the LLM receiving the full output of a tool call, often a massive JSON blob, and then being tasked to interpret and act on it. This quickly becomes inefficient: for example, requesting a list of issues from an MCP server may yield 70,000 characters of JSON, which must be piped back into the model. The LLM then needs to parse, sort, and reproduce this data as output tokens, a slow and costly process, especially as the context window fills up with redundant or low-value information (more: url). The lesson: code orchestration, where the LLM generates and coordinates snippets of code to process structured outputs, is simpler and more efficient than funneling everything through the LLM context window.
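The code-orchestration idea can be sketched as follows: instead of feeding the raw tool output back to the model, a short piece of (model-generated) code filters and sorts it, and only the compact result re-enters the context. The issue fields (`number`, `title`, `state`, `updated_at`) and the synthetic payload are assumptions for illustration, not the schema of any particular MCP server.

```python
import json


def summarize_issues(raw_json: str, limit: int = 3) -> str:
    """Filter and sort a tool result in code; return a compact summary."""
    issues = json.loads(raw_json)
    open_issues = [i for i in issues if i["state"] == "open"]
    # Most recently updated first; ISO-style dates sort correctly as strings.
    open_issues.sort(key=lambda i: i["updated_at"], reverse=True)
    return "\n".join(f"#{i['number']} {i['title']}" for i in open_issues[:limit])


# Synthetic stand-in for a large tool response (hypothetical data).
raw = json.dumps([
    {
        "number": n,
        "title": f"Issue {n}",
        "state": "open" if n % 2 else "closed",
        "updated_at": f"2025-01-{n:02d}",
        "body": "x" * 500,
    }
    for n in range(1, 21)
])

summary = summarize_issues(raw)
print(len(raw), "characters in the raw tool output")
print(len(summary), "characters passed back to the model")
print(summary)
```

The sorting and filtering happen deterministically in code, so the model spends output tokens only on the few lines it actually needs.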

OpenWebUI’s integration with MCP tools further illustrates both the promise and the friction. Tools are registered with OpenAPI-style /openapi.json descriptors, which the LLM can use to choose which tool to invoke. However, when starting new conversations or repeating queries, the LLM may not always use the appropriate tool, sometimes defaulting to “I don’t know,” especially if the context or tool selection logic isn’t robust (more: url). This underlines the challenge: tool integration standards are promising, but context management and tool selection still require refinement.
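To illustrate what a tool descriptor gives the model to choose from, the sketch below flattens an OpenAPI-style document into a list of candidate tools. The `paths`, `operationId`, and `summary` keys are standard OpenAPI fields. The example spec and the helper name `list_operations` are hypothetical, and this is not how OpenWebUI itself is known to implement tool selection.

```python
HTTP_METHODS = {"get", "post", "put", "patch", "delete"}


def list_operations(spec: dict) -> list[dict]:
    """Flatten an OpenAPI document into one entry per operation."""
    ops = []
    for path, item in spec.get("paths", {}).items():
        for method, op in item.items():
            if method not in HTTP_METHODS:
                continue  # skip non-operation keys such as "parameters"
            ops.append({
                "name": op.get("operationId", f"{method}_{path}"),
                "method": method.upper(),
                "path": path,
                "description": op.get("summary", ""),
            })
    return ops


# Hypothetical descriptor, shaped like what a tool server might serve
# at /openapi.json.
spec = {
    "openapi": "3.1.0",
    "info": {"title": "Example tool server", "version": "0.1.0"},
    "paths": {
        "/issues": {"get": {"operationId": "list_issues",
                            "summary": "List repository issues"}},
        "/time": {"get": {"operationId": "get_time",
                          "summary": "Return the current time"}},
    },
}

for op in list_operations(spec):
    print(f"{op['name']}: {op['method']} {op['path']} ({op['description']})")
```

The quality of the `summary` text matters here: it is often the main signal a model has when deciding whether a tool applies to a query.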

With the Bing Search API being decommissioned, web search as an LLM tool is also in flux. Google Gemini offers web grounding, but it is slow and behaves less like a callable tool. Combined with Azure’s and GCP’s shift toward agent-based paradigms, this signals that simple, fast search APIs are becoming harder to find, which has wide implications for developers relying on real-time web search as a function call within agentic workflows (more: url).

On the infrastructure front, open-source platforms continue to mature, aiming to simplify and scale AI/ML workflows. Kubeflow remains a leading ecosystem for orchestrating machine learning pipelines on Kubernetes. By bundling best-in-class open-source components for every stage of the AI/ML lifecycle, Kubeflow enables teams to build, deploy, and manage complex workflows with modularity and portability (more: url). Its community-driven governance and extensive documentation make it a staple for organizations seeking to avoid vendor lock-in while maintaining production-grade scalability.

Databases, too, are being fine-tuned for AI-era demands. Recent projects have delivered significant MySQL 8.0 optimizations, targeting InnoDB scalability, redo log handling, bulk insert performance, memory usage, and high availability. These patches, rigorously tested on high-performance hardware, promise more stable and efficient service than official releases, with rapid bug fixes and strong consistency reads that add only milliseconds of latency (more: url). Such improvements are critical as AI applications increasingly stress database backends with high-throughput, high-concurrency workloads.

The release of large, open datasets is fueling new frontiers in language and speech research. The “100,000 Podcasts” corpus offers a massive, diverse collection of spoken English, transcribed via automatic speech recognition. With its scale and genre diversity, the dataset supports research in NLP, information retrieval, summarization, and acoustic modeling—far surpassing previous corpora in size and complexity (more: url). Its existence underscores the trend: as models grow in capability, the bottleneck increasingly shifts to data quality and diversity.

In the realm of models, specialized and generalist LLMs are rapidly advancing. II-Medical-8B, built on Qwen3-8B and further optimized with domain-specific datasets and reinforcement learning, scores 40% on HealthBench, which is on par with OpenAI’s GPT-4.5, and is also evaluated on medical QA benchmarks like MedMCQA, MedQA, and PubMedQA (more: url). This highlights the impact of targeted training and evaluation in high-stakes domains like healthcare.

Meanwhile, NVIDIA’s Nemotron-Research-Reasoning-Qwen-1.5B is setting new standards for small-scale generalist reasoning. Trained with the ProRL (Prolonged Reinforcement Learning) algorithm and a diverse set of math, coding, and logic tasks, this 1.5B-parameter model outperforms DeepSeek’s 1.5B model and even rivals some 7B models in complex reasoning tasks (more: url). ProRL’s innovations, such as dynamic sampling, KL regularization, and entropy management, enable deeper exploration and generalization, which are crucial for robust instruction following and logic.

Diffusion language models are also in the spotlight, with Google’s Gemini Diffusion impressing observers by generating text sequences far faster than traditional autoregressive transformers. Unlike token-by-token generation, diffusion models refine the entire output in parallel at each step, allowing for both speed and flexibility in output quality (users can trade off speed for accuracy by adjusting the number of passes). However, diffusion models tend to produce fixed-length outputs and can struggle with variable-length, open-ended tasks—a limitation that keeps autoregressive models relevant for now (more: url).

Security research continues to probe the boundaries of protocol camouflage and detection. Aparecium, a proof-of-concept tool, detects TLS camouflage protocols like ShadowTLS v3 and REALITY by exploiting their imperfect handling of TLS 1.3 post-handshake messages. By analyzing how these protocols relay or pad handshake data, Aparecium can distinguish them from genuine TLS traffic—demonstrating that even sophisticated traffic obfuscation techniques may have subtle, detectable flaws (more: url).

Digital geometry theory is quietly underpinning advances in graphics and CAD. New work formalizes how “gaps” in 3D digital curves—portions a discrete ray can traverse without intersecting a voxel—can be precisely characterized by linear combinations of basic geometric elements. This has direct implications for ray tracing and combinatorial image analysis, fields where efficient gap detection can accelerate rendering and analysis (more: url).

Finally, in the world of productivity hacks, GitHub Issues is gaining a following as a de facto notebook. With unlimited notes, Markdown support, checklist syntax, powerful inter-linking, and automation via GitHub Actions, Issues offers a flexible, searchable, and free platform for both public and private note-taking. The main drawback is the lack of offline sync, but for many, the convenience and integration outweigh this limitation. Notably, concerns about privacy and data mining are minimal, as GitHub’s business model and reputation for security inspire more confidence than many commercial note apps (more: url).
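For readers who want to script this workflow, here is a minimal sketch that prepares a "create issue" request for the GitHub REST API with a Markdown checklist as the note body. The owner, repository, and token values are placeholders, and the helper name `build_note_request` is hypothetical. The request is only constructed here, not sent.

```python
import json
import urllib.request


def build_note_request(owner: str, repo: str, title: str,
                       todos: list[str], token: str) -> urllib.request.Request:
    """Prepare a POST to the GitHub REST API that creates an issue as a note."""
    # Markdown task-list syntax renders as checkboxes in the issue body.
    body = "\n".join(f"- [ ] {item}" for item in todos)
    payload = json.dumps({"title": title, "body": body}).encode("utf-8")
    return urllib.request.Request(
        f"https://api.github.com/repos/{owner}/{repo}/issues",
        data=payload,
        method="POST",
        headers={
            "Accept": "application/vnd.github+json",
            "Authorization": f"Bearer {token}",
        },
    )


# Placeholder values; a real call needs a token with access to the repository.
req = build_note_request("example-user", "notes", "Reading list",
                         ["Paper A", "Paper B"], "TOKEN")
print(req.get_method(), req.full_url)
print(json.loads(req.data)["body"])
```

Sending the request would be a matter of passing `req` to `urllib.request.urlopen`, which is left out here so the sketch runs without network access or credentials.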

With the explosion of open-source models, users are increasingly interested in mapping specific use-cases to the most effective models. Questions abound: Which open LLM excels at sentiment analysis? Which is best for emotion detection, innovation, or anomaly detection? While some community efforts are underway to benchmark and compare models on behavior-based tasks, the landscape remains fragmented. The need is clear: curated guidance and standardized evaluations for matching open models to targeted applications, moving beyond generic “chatbot” metrics to real-world utility (more: url).