The debate over local versus cloud AI continues to intensify as more developers weigh privacy, cost, and performance. One user, aiming for an off-grid, energy-efficient setup, broke down the economics of running a high-performance AI workstation locally. Counting $1,500 up front for a system with an AMD EPYC CPU and an RTX 5060 Ti GPU, plus an annual electricity bill of roughly $175 for 8 hours of daily use, the first-year cost comes to about $1,675 and is predictable. In contrast, renting comparable hardware from cloud providers like Vast costs about $0.11 per hour, or roughly $320 a year at the same 8 hours per day, but without hardware ownership or the ability to optimize for ultra-low power (more: url).
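The figures above can be turned into a quick break-even calculation. This is a minimal sketch that uses only the quoted numbers; it assumes electricity cost scales linearly with hours of use and ignores resale value, storage or egress fees, and future price changes.

```python
from typing import Optional

# Figures quoted in the post; the hourly electricity rate is derived from them.
HARDWARE_USD = 1500.0                 # one-time workstation cost
ELECTRICITY_USD_PER_YEAR_8H = 175.0   # quoted bill at 8 hours of use per day
CLOUD_USD_PER_HOUR = 0.11             # quoted Vast rental rate
ELECTRICITY_USD_PER_HOUR = ELECTRICITY_USD_PER_YEAR_8H / (8 * 365)


def local_cost(years: float, hours_per_day: float = 8) -> float:
    """Cumulative cost of owning: hardware up front plus electricity (assumed to scale with usage)."""
    return HARDWARE_USD + ELECTRICITY_USD_PER_HOUR * hours_per_day * 365 * years


def cloud_cost(years: float, hours_per_day: float = 8) -> float:
    """Cumulative rental cost at the quoted hourly rate (no storage or egress fees)."""
    return CLOUD_USD_PER_HOUR * hours_per_day * 365 * years


def break_even_years(hours_per_day: float = 8) -> Optional[float]:
    """Years after which owning becomes cheaper than renting, or None if it never does."""
    saving_per_hour = CLOUD_USD_PER_HOUR - ELECTRICITY_USD_PER_HOUR
    if saving_per_hour <= 0:
        return None  # electricity alone costs more than renting
    return HARDWARE_USD / (saving_per_hour * hours_per_day * 365)


for hours in (8, 24):
    print(f"{hours} h/day: cloud {cloud_cost(1, hours):.0f} USD/yr, "
          f"local year one {local_cost(1, hours):.0f} USD, "
          f"break-even after {break_even_years(hours):.1f} years")
```

At the quoted rates, owning pays off only after roughly 10 years at 8 hours per day, or about 3.4 years if the machine runs around the clock, which is why usage intensity matters as much as the sticker price.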

For privacy-focused users, the conversation is shifting rapidly toward local-first solutions. Cobolt exemplifies this trend—a cross-platform, open-source AI assistant running entirely on-device, with no data sent to the cloud, and extensibility via the Model Context Protocol (MCP). Cobolt leverages Ollama under the hood for smooth local LLM interaction, aiming to put users in full control of their data and tools. The push for local AI is no longer just about privacy; it’s about flexibility, extensibility, and community-driven innovation (more: url).

Technical enthusiasts are also experimenting with turning gaming-class PCs into headless AI workstations. One user with an RTX 3090 (24 GB of VRAM) discovered that the memory needed to run models like Qwen3-32B-GGUF is not simply a matter of file size or parameter count. When VRAM overflows, the excess spills into system RAM, whose usage spikes, and inference slows severely. Estimating LLM memory needs therefore demands a nuanced understanding of quantization, architecture, and runtime overheads, which keeps the quest for the optimal local setup an ongoing challenge (more: url).
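A back-of-the-envelope estimate shows why file size alone misleads: the KV cache grows with context length on top of the weights. This sketch assumes a Qwen3-32B shape of about 32.8B parameters, 64 layers, 8 KV heads, and a head dimension of 128, an average of about 4.8 bits per weight for a 4-bit K-quant GGUF, an f16 KV cache, and a hypothetical 1 GiB of runtime overhead; check the model card and your runtime for the real values.

```python
GIB = 1024 ** 3


def weight_bytes(n_params: float, bits_per_weight: float) -> float:
    """Approximate size of quantized weights; bits_per_weight is an average over all tensors."""
    return n_params * bits_per_weight / 8


def kv_cache_bytes(n_layers: int, n_kv_heads: int, head_dim: int,
                   context_len: int, bytes_per_elem: float = 2.0) -> float:
    """KV cache size; the leading 2 counts one key and one value vector per layer, KV head and token."""
    return 2 * n_layers * n_kv_heads * head_dim * context_len * bytes_per_elem


def fits_in_vram(total_bytes: float, vram_gib: float, overhead_gib: float = 1.0) -> bool:
    """True if weights + KV cache + an assumed runtime overhead fit on the GPU."""
    return total_bytes + overhead_gib * GIB <= vram_gib * GIB


# Assumed Qwen3-32B shape: ~32.8B params, 64 layers, 8 KV heads (GQA), head_dim 128.
# Assumed quantization: ~4.8 bits/weight, roughly a 4-bit K-quant GGUF.
weights = weight_bytes(32.8e9, 4.8)
for ctx in (8192, 32768):
    total = weights + kv_cache_bytes(64, 8, 128, ctx)
    print(f"ctx={ctx}: {total / GIB:.1f} GiB, fits in 24 GiB: {fits_in_vram(total, 24)}")
```

Under these assumptions the same file fits comfortably at an 8K context but overflows a 24 GB card at 32K, which is exactly when layers or cache spill into system RAM.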

As AI agents become more capable, the Model Context Protocol (MCP) is gaining traction as a standard for tool integration. MCP turns the classic M×N integration problem, where each of M AI clients needs its own connector to each of N tools, into a more manageable M+N one in which every client and every tool implements the protocol once. MCP servers expose tools, prompts, and resources through a unified interface, while MCP clients (inside AI applications) can connect to any server, decoupling LLMs from the specifics of each data source or action (more: url).
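To make the "unified interface" concrete, here is a minimal in-process sketch of the server side. MCP messages are JSON-RPC 2.0, and tool discovery and invocation use the `tools/list` and `tools/call` methods; everything else here (the `tool` decorator, the `add` tool, the absence of a transport and of the initialization handshake) is a simplifying assumption, not an MCP SDK.

```python
import json
from typing import Any, Callable, Dict

# Hypothetical in-process registry; a real server would use an MCP SDK plus a
# transport (stdio or HTTP) and the protocol's initialization handshake.
TOOLS: Dict[str, Dict[str, Any]] = {}


def tool(name: str, description: str, input_schema: Dict[str, Any]) -> Callable:
    """Decorator that registers a function as a tool with a JSON Schema for its arguments."""
    def register(fn: Callable) -> Callable:
        TOOLS[name] = {"fn": fn, "description": description, "inputSchema": input_schema}
        return fn
    return register


@tool("add", "Add two integers", {
    "type": "object",
    "properties": {"a": {"type": "integer"}, "b": {"type": "integer"}},
    "required": ["a", "b"],
})
def add(a: int, b: int) -> str:
    return str(a + b)


def _reply(req_id: Any, **body: Any) -> str:
    return json.dumps({"jsonrpc": "2.0", "id": req_id, **body})


def handle(raw: str) -> str:
    """Dispatch one JSON-RPC 2.0 request to the tools/list or tools/call method."""
    req = json.loads(raw)
    req_id, params = req.get("id"), req.get("params", {})
    if req["method"] == "tools/list":
        tools = [{"name": n, "description": t["description"], "inputSchema": t["inputSchema"]}
                 for n, t in TOOLS.items()]
        return _reply(req_id, result={"tools": tools})
    if req["method"] == "tools/call":
        entry = TOOLS.get(params.get("name"))
        if entry is None:
            return _reply(req_id, error={"code": -32602, "message": "Unknown tool"})
        text = entry["fn"](**params.get("arguments", {}))
        return _reply(req_id, result={"content": [{"type": "text", "text": text}]})
    return _reply(req_id, error={"code": -32601, "message": "Method not found"})


def connector_count(m_clients: int, n_tools: int) -> tuple:
    """Point-to-point connectors (M x N) vs. protocol implementations (M + N)."""
    return m_clients * n_tools, m_clients + n_tools
```

Any client that speaks the same two methods can discover and call `add` without tool-specific glue; with 3 clients and 5 tools, that is 8 protocol implementations instead of 15 bespoke connectors.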

However, this power brings new security risks. Invariant Labs uncovered a critical vulnerability in GitHub’s MCP integration in which a malicious GitHub issue can prompt-inject an agent into leaking private repository data. In this “toxic agent flow,” an attacker files an issue in a public repository; the agent reads it, follows the embedded instructions as if they came from the user, and ends up accessing sensitive data from the user’s private repositories and exposing it in a pull request it opens autonomously. The attack highlights the growing importance of prompt-injection defenses and access control as AI agents and IDEs become more tightly woven into the software development lifecycle (more: url).
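One form of access control that targets this specific flow is to track, per agent session, whether private data has been read and to refuse actions that could carry it to a public destination. The guard below is a hypothetical illustration of that idea (the `SessionFlowGuard` class and the read/write action labels are ours), not Invariant Labs' tooling or GitHub's API.

```python
from dataclasses import dataclass, field
from typing import Dict, Set


@dataclass
class SessionFlowGuard:
    """Hypothetical guardrail: once an agent session has read a private repository,
    refuse write-like actions (opening PRs, commenting) on any non-private repository."""
    visibility: Dict[str, str]                     # repo -> "public" or "private"
    private_reads: Set[str] = field(default_factory=set)

    def allow(self, action: str, repo: str) -> bool:
        vis = self.visibility.get(repo)            # None means unknown
        if action == "read":
            if vis != "public":                    # unknown repos are treated as private
                self.private_reads.add(repo)
            return True
        # Any other action is treated as a write. Block it if it could carry private
        # data to a public or unknown destination.
        return not (self.private_reads and vis != "private")
```

In the attack described above, reading the public issue is allowed, reading the private repository taints the session, and the final pull request to the public repository is refused. A stricter variant would pin each session to a single repository altogether.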

On the innovation front, developers are pushing MCP’s capabilities further. One project equips the tiny Qwen3 0.6B model, running locally in the browser, with MCP support, enabling it to chain tool calls and operate with adjustable reasoning depth. This shows how even small models, given the right “toolbelt” via MCP, can perform surprisingly complex tasks, especially when paired with remote tool servers and thoughtful prompt engineering (more: url).
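Chaining tool calls usually means a loop: the model either requests a tool or gives a final answer, each tool result is appended to the history, and a step budget stops runaway chains. The sketch below assumes that shape; the project's actual loop is not described, so `scripted_model` is a hard-coded stand-in for the LLM and both demo tools are hypothetical.

```python
from typing import Any, Callable, Dict, List

Message = Dict[str, Any]


def run_agent(model: Callable[[List[Message]], Dict[str, Any]],
              tools: Dict[str, Callable[..., Any]],
              user_msg: str, max_steps: int = 5) -> str:
    """Let the model chain tool calls until it returns an answer or the step budget runs out."""
    history: List[Message] = [{"role": "user", "content": user_msg}]
    for _ in range(max_steps):
        turn = model(history)                       # {"tool": ..., "args": ...} or {"answer": ...}
        if "answer" in turn:
            return turn["answer"]
        result = tools[turn["tool"]](**turn["args"])
        history.append({"role": "tool", "name": turn["tool"], "content": str(result)})
    raise RuntimeError("step budget exhausted without a final answer")


# Scripted stand-in for the model: look up a temperature, convert it, then answer.
def scripted_model(history: List[Message]) -> Dict[str, Any]:
    results = [m["content"] for m in history if m["role"] == "tool"]
    if not results:
        return {"tool": "get_temp_c", "args": {"city": "Oslo"}}
    if len(results) == 1:
        return {"tool": "c_to_f", "args": {"c": float(results[0])}}
    return {"answer": f"{results[1]} F"}


DEMO_TOOLS = {
    "get_temp_c": lambda city: 10.0,                # hypothetical fixed reading
    "c_to_f": lambda c: c * 9 / 5 + 32,
}
```

The second call depends on the first call's output, which is the "chaining" part; a small model mainly has to emit well-formed calls, while the tools do the heavy lifting.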

The protocol’s flexibility is also apparent in new self-hosted alternatives to OpenAI’s Code Interpreter. Projects like microsandbox, written in Rust, allow users to securely run AI-generated code in lightweight micro-VMs, connectable via MCP. This provides a path toward both safe code execution and seamless AI-tool interoperability, with Python, TypeScript, and Rust SDKs available for rapid integration (more: url).

As open-source LLMs mature, the ecosystem around local model serving and tool integration is evolving rapidly. Ollama and OpenWebUI are a popular pairing, allowing users to serve models locally with a web-based interface. However, integrating persistent tools—such as API connectors or file systems—remains non-trivial. Users are seeking ways to “bind” tools to models so that they’re always available in a session, akin to the persistent tool access seen in cloud-based solutions. While MCP offers a standards-based path, practical, user-friendly implementations are still catching up to demand (more: url).
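At the API level, one way to "bind" tools is simply to resend the same tool list with every request in a session. Ollama's `/api/chat` endpoint accepts a `tools` array in function-calling format; the `ToolBoundSession` helper, the `get_weather` tool, and the `qwen3` model name below are hypothetical, executing the returned `tool_calls` is left to the caller, and OpenWebUI's own tool mechanism is not shown.

```python
import json
import urllib.request
from typing import Any, Dict, List

OLLAMA_CHAT_URL = "http://localhost:11434/api/chat"   # Ollama's default local endpoint

# Tool definition in the function-calling format that /api/chat accepts (example tool is hypothetical).
WEATHER_TOOL = {
    "type": "function",
    "function": {
        "name": "get_weather",
        "description": "Get the current weather for a city",
        "parameters": {
            "type": "object",
            "properties": {"city": {"type": "string"}},
            "required": ["city"],
        },
    },
}


class ToolBoundSession:
    """Hypothetical helper: re-sends the same tool list with every request in a session."""

    def __init__(self, model: str, tools: List[Dict[str, Any]]):
        self.model = model
        self.tools = tools
        self.messages: List[Dict[str, Any]] = []

    def build_request(self, user_text: str) -> urllib.request.Request:
        self.messages.append({"role": "user", "content": user_text})
        payload = {"model": self.model, "messages": self.messages,
                   "tools": self.tools, "stream": False}
        return urllib.request.Request(
            OLLAMA_CHAT_URL, data=json.dumps(payload).encode("utf-8"),
            headers={"Content-Type": "application/json"}, method="POST")

    def send(self, user_text: str) -> Dict[str, Any]:
        """Post one turn; the reply may contain "tool_calls" for the caller to execute."""
        with urllib.request.urlopen(self.build_request(user_text)) as resp:
            reply = json.loads(resp.read())["message"]
        self.messages.append(reply)
        return reply
```

Usage would be `ToolBoundSession("qwen3", [WEATHER_TOOL]).send("Weather in Oslo?")` against a running Ollama with that model pulled; the point is that tool availability lives in the session object rather than in each individual prompt.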

Containerization and orchestration introduce their own challenges. Running vLLM (a popular high-throughput inference engine for LLMs) in Kubernetes exposes startup-time bottlenecks, caused mainly by large Docker images and slow model-weight downloads. Prefetching weights during the image build speeds things up, but at the cost of ballooning image sizes (as large as 26 GB locally, 15.6 GB gzipped). Even with these optimizations, cold starts can take over 100 seconds, while restarts (with the model already in memory) drop to under a minute. Further speedups may require custom Docker images stripped of unneeded dependencies, or techniques such as baking CUDA graphs into the image, but the tradeoff between flexibility and performance remains an active area of experimentation (more: url).
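Simple arithmetic shows why image size dominates cold starts. This sketch computes a best-case pull time for the 15.6 GB compressed image at two assumed node bandwidths; it ignores registry throttling, decompression, and the fact that nodes may already cache some layers.

```python
def pull_seconds(compressed_gb: float, link_gbps: float) -> float:
    """Best-case transfer time: gigabytes x 8 = gigabits, divided by the link rate.
    Ignores registry throttling, layer decompression and on-node image caching."""
    return compressed_gb * 8 / link_gbps


compression_ratio = 15.6 / 26          # gzipped vs. local image size from the post
for gbps in (1, 10):                   # assumed node bandwidths
    print(f"{gbps} Gbit/s: at least {pull_seconds(15.6, gbps):.0f} s to pull 15.6 GB")
```

On a 1 Gbit/s link the transfer alone takes about two minutes, the same order as the observed cold starts, while at 10 Gbit/s it shrinks to roughly 12 seconds and other startup costs start to matter more.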

For those seeking to push the limits of local inference, memory efficiency is a recurring theme. The new KVSplit project lets users on Apple Silicon run larger LLMs with longer context windows by quantizing the keys and values in the attention (KV) cache to different precisions. This can cut KV-cache memory by up to 72% with minimal quality loss, enabling 2–3× longer contexts and potentially faster inference, an important advance for anyone running LLMs on resource-constrained hardware (more: url).
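The savings follow directly from bits per element. This sketch uses llama.cpp's `q8_0` and `q4_0` block formats (32 values in 34 and 18 bytes, i.e. 8.5 and 4.5 effective bits); we assume, but have not verified, that these are the formats behind KVSplit's figures. With those numbers, 4-bit keys and values give about a 72% reduction versus f16, and 8-bit keys with 4-bit values about 59%.

```python
# Effective bits per element, including llama.cpp's per-block scales:
# q8_0 stores 32 values in 34 bytes, q4_0 stores 32 values in 18 bytes.
BITS = {"f16": 16.0, "q8_0": 8.5, "q4_0": 4.5}


def kv_cache_bytes(n_layers, n_kv_heads, head_dim, context_len, k_type, v_type):
    """KV cache size when keys and values use different storage types."""
    per_token_per_layer = n_kv_heads * head_dim * (BITS[k_type] + BITS[v_type]) / 8
    return n_layers * context_len * per_token_per_layer


def reduction_vs_f16(k_type, v_type):
    """Fraction of KV-cache memory saved relative to f16 keys and values."""
    return 1 - (BITS[k_type] + BITS[v_type]) / (2 * BITS["f16"])


def context_multiplier(k_type, v_type):
    """How much longer a context fits in the same KV-cache budget."""
    return (2 * BITS["f16"]) / (BITS[k_type] + BITS[v_type])
```

Keeping keys at higher precision than values is the design choice that distinguishes a split configuration from uniform quantization: it buys most of the memory savings while protecting the attention scores, which depend on the keys.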

Open-source LLMs are demonstrating real progress in specialized reasoning and structured output. Osmosis-Structure-0.6B, a compact 0.6B-parameter model built on Qwen3-0.6B, is tuned specifically for structured output generation: it is trained to focus on extracting values for declared keys. Used as a structuring step after larger models, it yields dramatic gains on mathematical reasoning benchmarks, up to 13× improvement for some GPT-4.1 and Claude variants when their outputs are “osmosis-enhanced.” The key insight is that letting models “think freely” during inference and enforcing structure afterward yields both accuracy and well-formed outputs, demonstrating that even small models can deliver robust structured reasoning when paired with the right inference engine (more: url).
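The division of labor is easy to sketch: stage one produces unconstrained reasoning, stage two fills only the declared keys. In the sketch below, a hard-coded string stands in for the reasoning model and a regular expression stands in for the structuring model; Osmosis itself is a fine-tuned LLM, not a regex, so treat the `PATTERNS` mapping and both functions as hypothetical.

```python
import json
import re
from typing import Dict, Optional

# Declared keys and, for this stand-in only, a regex that finds each key's value.
PATTERNS = {"answer": r"final answer is\s*(-?\d+(?:\.\d+)?)"}


def free_form_reasoning(question: str) -> str:
    """Stage 1 stand-in: an unconstrained model response, hard-coded here."""
    return "We need 17 * 3. 17 * 3 = 51. So the final answer is 51."


def structure(text: str, patterns: Dict[str, str]) -> Dict[str, Optional[str]]:
    """Stage 2 stand-in: fill each declared key from the free text (last mention wins)."""
    out: Dict[str, Optional[str]] = {}
    for key, pattern in patterns.items():
        matches = re.findall(pattern, text, flags=re.IGNORECASE)
        out[key] = matches[-1] if matches else None
    return out


print(json.dumps(structure(free_form_reasoning("What is 17 * 3?"), PATTERNS)))
```

Because the reasoning step never sees a schema, it is not forced to commit to an answer before working it out; the structure is imposed only on the finished text.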

In the multimodal domain, the MMaDA project introduces a unified diffusion-based foundation model, capable of both text and image generation, as well as reasoning across modalities. MMaDA’s architecture discards modality-specific components in favor of a unified probabilistic design, and introduces a mixed chain-of-thought (CoT) fine-tuning strategy that curates reasoning patterns across modalities. The project also pioneers a new RL algorithm, UniGRPO, for unified post-training. Early results suggest that this approach yields consistent improvements in both reasoning and generative tasks, signaling a potential paradigm shift in how AI models handle complex, cross-domain tasks (more: url).
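UniGRPO builds on GRPO (Group Relative Policy Optimization), whose core idea is a group-normalized advantage: sample several responses per prompt and score each against its own group instead of a learned value function. The sketch below shows only that vanilla GRPO advantage; how UniGRPO adapts it to diffusion models and multiple modalities is not reproduced here.

```python
import statistics
from typing import List


def group_relative_advantages(rewards: List[float], eps: float = 1e-8) -> List[float]:
    """Vanilla GRPO advantage: score each sampled response against the mean and
    standard deviation of its own group, so no learned value function is needed.
    (Population std here; implementations differ on this detail.)"""
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)
    return [(r - mean) / (std + eps) for r in rewards]


# Four sampled answers to one prompt, rewarded 1 if correct and 0 otherwise.
print(group_relative_advantages([1.0, 0.0, 0.0, 1.0]))
```

Correct samples receive positive advantages and incorrect ones negative, and a group where every sample earns the same reward contributes no learning signal.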

For enterprise use cases, open models are rapidly closing the gap with proprietary offerings. A consultant building a customer-facing support agent, capable of interacting with 30+ APIs and handling multi-step workflows, found that Llama 3.3 70B delivered the best results among the open models tested (outperforming Gemma and Qwen3). While the desire to avoid proprietary models for data-privacy reasons remains strong, the current generation of open LLMs is proving viable for complex, large-scale deployments, though continued improvements in tool use and workflow orchestration are needed (more: url).

The march toward specialized foundation models continues with time-series forecasting and multimodal search. Datadog’s Toto-Open-Base-1.0 is a transformer-based model trained on over 2 trillion time-series data points, by far the largest dataset for any open-weights time-series model. Toto’s architecture is optimized for the high-dimensional, sparse, and non-stationary data typical of observability metrics. Key features include zero-shot forecasting, support for variable prediction horizons, and probabilistic outputs using a Student-t mixture model. Toto’s strong performance on the GIFT-Eval and BOOM benchmarks demonstrates that open models can now challenge proprietary solutions in the critical domain of observability and anomaly detection (more: url).
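A Student-t mixture turns each forecast step into a full distribution rather than a point value, and heavy tails suit spiky observability metrics. This sketch evaluates and samples such a mixture with the standard library; the component weights, degrees of freedom, locations, and scales are hypothetical, and how Toto parameterizes its output head is not shown.

```python
import math
import random
from typing import List, Sequence, Tuple

Component = Tuple[float, float, float, float]   # (weight, degrees of freedom, loc, scale)


def student_t_pdf(x: float, df: float, loc: float = 0.0, scale: float = 1.0) -> float:
    """Density of a location-scale Student-t distribution."""
    z = (x - loc) / scale
    log_norm = (math.lgamma((df + 1) / 2) - math.lgamma(df / 2)
                - 0.5 * math.log(df * math.pi) - math.log(scale))
    return math.exp(log_norm - (df + 1) / 2 * math.log1p(z * z / df))


def mixture_pdf(x: float, components: Sequence[Component]) -> float:
    """Weighted sum of component densities; weights are assumed to sum to 1."""
    return sum(w * student_t_pdf(x, df, loc, s) for w, df, loc, s in components)


def sample_mixture(components: Sequence[Component], n: int, rng: random.Random) -> List[float]:
    """Draw samples: pick a component by weight, then t = loc + scale * Z / sqrt(V / df)."""
    weights = [c[0] for c in components]
    out = []
    for _ in range(n):
        _, df, loc, s = rng.choices(components, weights=weights)[0]
        v = rng.gammavariate(df / 2, 2.0)           # chi-squared with df degrees of freedom
        out.append(loc + s * rng.gauss(0.0, 1.0) / math.sqrt(v / df))
    return out


def quantile(samples: List[float], q: float) -> float:
    """Empirical quantile by sorting (nearest-rank style)."""
    ordered = sorted(samples)
    return ordered[min(len(ordered) - 1, int(q * len(ordered)))]


# Hypothetical forecast for one future step: a narrow main mode plus a heavy-tailed spike mode.
forecast = [(0.8, 5.0, 100.0, 2.0), (0.2, 2.5, 120.0, 10.0)]
samples = sample_mixture(forecast, 10_000, random.Random(0))
print("10%/50%/90%:", [round(quantile(samples, q), 1) for q in (0.1, 0.5, 0.9)])
```

Quantiles from such samples give prediction intervals directly, which is what anomaly detection needs: an observation far outside the 90% band is suspicious, whereas a point forecast alone cannot say how far is too far.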

On the multimodal front, open-source projects are leveraging CLIP (Contrastive Language-Image Pretraining) to deliver local, natural language image search. By embedding both images and queries into a shared vector space, users can search personal image collections using plain English, all running locally for privacy and speed. Open-sourcing the full stack—including a simple UI—lowers the barrier for hobbyists and researchers to experiment with multimodal retrieval on their own hardware (more: url).
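Once images and queries live in the same vector space, search reduces to ranking by cosine similarity. In this sketch, toy 3-D vectors stand in for real CLIP embeddings, which come from the image and text encoders and have hundreds of dimensions; the file names and the query vector are hypothetical.

```python
import math
from typing import Dict, List, Sequence


def normalize(v: Sequence[float]) -> List[float]:
    """Scale to unit length so a dot product equals cosine similarity."""
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v]


def search(query_vec: Sequence[float], index: Dict[str, Sequence[float]], top_k: int = 3) -> List[str]:
    """Rank images by cosine similarity between their embedding and the query embedding."""
    q = normalize(query_vec)
    scored = [(sum(a * b for a, b in zip(q, normalize(vec))), path) for path, vec in index.items()]
    scored.sort(reverse=True)
    return [path for _, path in scored[:top_k]]


# Toy 3-D stand-ins; real CLIP embeddings come from the image and text encoders
# and have hundreds of dimensions.
INDEX = {"beach.jpg": [0.9, 0.1, 0.0], "dog.jpg": [0.1, 0.9, 0.2], "mountain.jpg": [0.3, 0.1, 0.9]}
query = [0.8, 0.2, 0.1]   # pretend text embedding of "sunny day by the sea"
print(search(query, INDEX, top_k=2))
```

In a real deployment the image embeddings are computed once and stored, so each query costs a single text-encoder pass plus a similarity scan, which is cheap enough to run entirely on local hardware.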

The rise of agentic coding assistants brings both promise and peril. A review of the new OpenAI Codex raises questions about its code quality and practical utility. Users want to know whether it can reliably build out projects and how it compares to tools like Cursor, but direct, evidence-backed assessments are still emerging (more: url).

Real-world experience with agents like Claude highlights both their power and their quirks. Claude is capable of automating complex workflows, such as orchestrating AWS Transcribe jobs, but tends to over-engineer solutions and can run into edge-case failures. The key to productive automation is enforcing idempotency and recovery—making sure each step can be retried without repeating completed work. This principle, familiar to experienced engineers, is critical for building robust agentic workflows that survive errors and interruptions (more: url).
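Idempotency and recovery can be as simple as checkpointing each completed step. In this sketch, every named step's result is written to a state file, and a rerun skips anything already recorded; the step names and the JSON checkpoint file are hypothetical, and the AWS Transcribe calls themselves are not shown.

```python
import json
from pathlib import Path
from typing import Any, Callable, Dict, List, Tuple

Step = Tuple[str, Callable[[], Any]]


def run_pipeline(steps: List[Step], state_file: Path) -> Dict[str, Any]:
    """Run named steps in order, checkpointing each result so a rerun skips finished work.
    Results must be JSON-serializable (e.g. a job name or an S3 URI)."""
    done: Dict[str, Any] = json.loads(state_file.read_text()) if state_file.exists() else {}
    for name, fn in steps:
        if name in done:
            continue                        # completed on an earlier attempt: do not redo it
        done[name] = fn()
        tmp = state_file.with_suffix(".tmp")
        tmp.write_text(json.dumps(done))    # write to a temp file, then rename, so a crash
        tmp.replace(state_file)             # mid-write never leaves a corrupt checkpoint
    return done
```

Checkpointing covers failures between steps; a crash after a step's side effect but before its checkpoint still reruns that step, so each step should itself be safe to repeat, for example by using a deterministic job name instead of a random one.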

Meanwhile, the security posture of AI agents is under increased scrutiny. As demonstrated in the MCP/GitHub exploit, agents empowered to take real actions can be manipulated if prompt injection or cross-context data flows are not tightly controlled. As AI coding agents and IDE plugins become ubiquitous, the industry must invest in both technical and procedural defenses to avoid becoming conduits for “toxic flows” and data leaks.

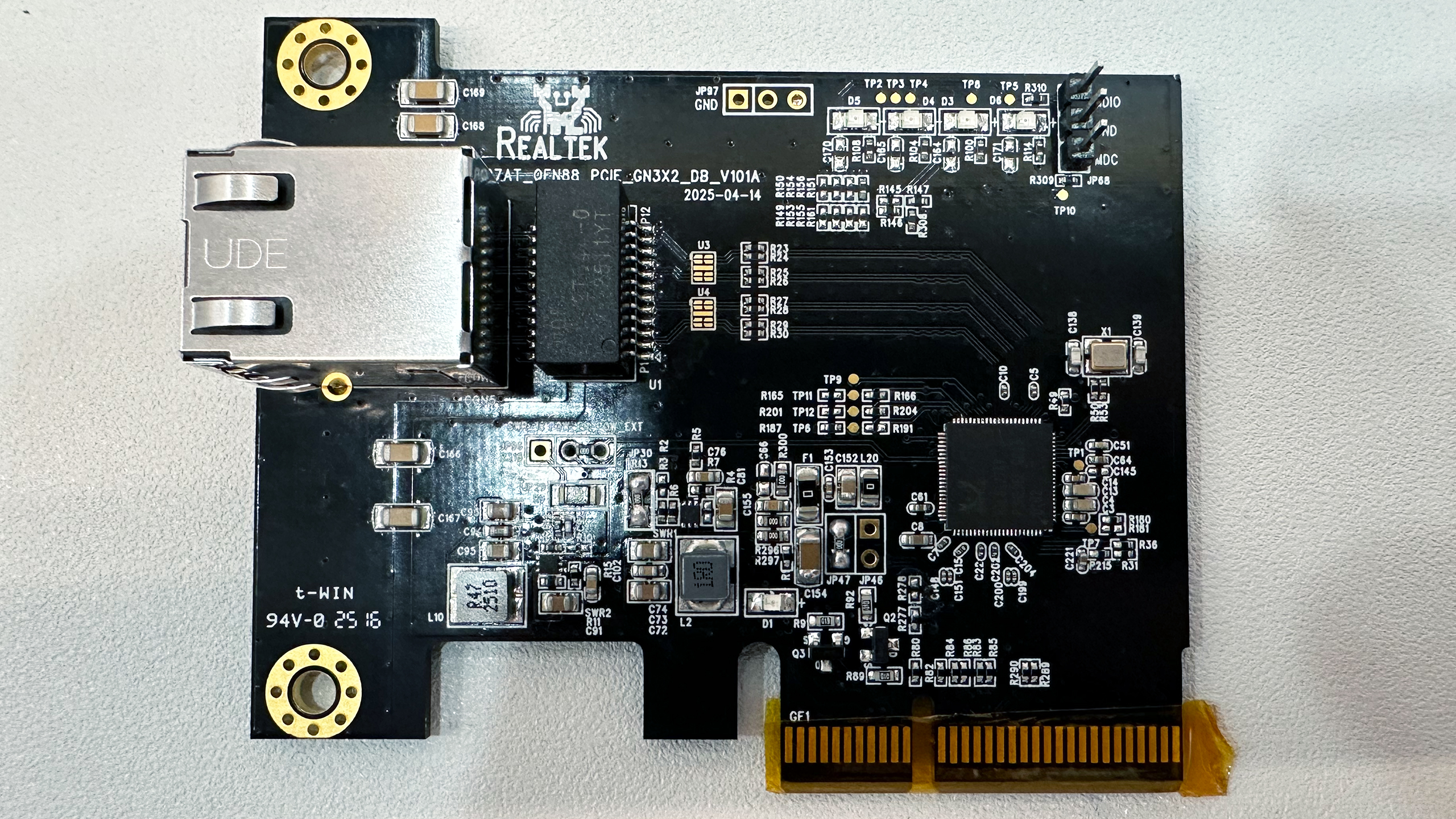

The infrastructure underpinning AI and software development is evolving to catch up with user expectations for speed and efficiency. Realtek’s announcement of a $10–$15 10GbE NIC (RTL8127) promises to bring high-speed networking to mainstream motherboards, reducing a key bottleneck for local AI clusters and high-throughput data tasks. The RTL8127 is compact (9 mm × 9 mm), supports multiple link speeds, and consumes less than 2 W, an important consideration for energy-conscious setups (more: url).

On the software side, the Python ecosystem is getting a major speed boost with tools like uv—a Rust-based package and project manager that claims to be 10–100× faster than pip, and offers a unified interface for dependency management, virtual environments, and project scripting. For developers managing complex AI stacks, faster and more reliable tooling like uv can translate into significant productivity gains (more: url).

For macOS users, RsyncUI wraps the venerable rsync command-line tool in a SwiftUI interface, simplifying the process of setting up and monitoring file synchronization tasks. While not AI-specific, such user-friendly wrappers lower the barrier for non-experts to adopt best practices in data management—crucial for anyone running local LLMs with large datasets (more: url).

The theoretical underpinnings of machine learning continue to inform practical advances. A recent paper on sparse spiked matrix estimation rigorously proves the existence of sharp 0–1 phase transitions in the minimum mean-square error (MMSE) for certain high-dimensional estimation problems. When the sparsity and signal strength of the underlying “spike” scale appropriately, the asymptotic MMSE drops abruptly from its maximal value to zero as the signal strength crosses a threshold, a consequence of explicit variational formulas the authors prove for the mutual information, mirroring phase transitions observed in compressive sensing. Such results deepen the understanding of information-theoretic limits in high-dimensional inference and may inform future algorithmic design (more: url).
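Schematically, and in our own notation, the setting and the 0–1 statement can be written as follows. The exact normalization, the sparse prior $P_{\rho_n}$ (for example Bernoulli or Bernoulli-Rademacher with $P(X_i \neq 0) = \rho_n$), and the critical scaling $\lambda_c(n)$ are assumptions here; the paper gives the precise conditions.

```latex
\mathbf{Y} = \sqrt{\tfrac{\lambda}{n}}\,\mathbf{X}\mathbf{X}^{\top} + \mathbf{Z},
\qquad Z_{ij} = Z_{ji} \sim \mathcal{N}(0,1),
\qquad X_i \overset{\text{i.i.d.}}{\sim} P_{\rho_n},\ \ \rho_n \to 0,

\mathrm{MMSE}_n(\lambda) =
\frac{\mathbb{E}\,\big\| \mathbf{X}\mathbf{X}^{\top} - \mathbb{E}[\mathbf{X}\mathbf{X}^{\top} \mid \mathbf{Y}] \big\|_F^2}
     {\mathbb{E}\,\big\| \mathbf{X}\mathbf{X}^{\top} \big\|_F^2},
\qquad
\lim_{n \to \infty} \mathrm{MMSE}_n\big(\gamma\,\lambda_c(n)\big) =
\begin{cases} 1, & \gamma < 1, \\ 0, & \gamma > 1. \end{cases}
```

Below the threshold, observing $\mathbf{Y}$ asymptotically tells you essentially nothing useful about the spike; just above it, the spike is recovered essentially perfectly, with no intermediate regime.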

In robotics, new work on the 3D Variable Height Inverted Pendulum (VHIP) model generalizes classic balance and push recovery techniques to three dimensions, accounting for variable center of pressure and height. This enables more physically realistic modeling and control of humanoid robots, allowing for independent analysis of height and lateral stabilization strategies. Generalizing 2D feedback controllers and motion decomposition to 3D VHIP brings the field closer to robust, real-world dynamic balance in complex environments (more: url).
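For orientation, the VHIP dynamics are commonly written as $\ddot{\mathbf{c}} = \lambda(\mathbf{c} - \mathbf{r}) + \mathbf{g}$: the ground reaction force points from the center of pressure $\mathbf{r}$ through the center of mass $\mathbf{c}$, and $\lambda \ge 0$ because the ground can only push. The sketch below integrates that 3D model open-loop with a constant $\lambda$ and a fixed CoP, both hypothetical simplifications; in the paper's setting they are control inputs, and its feedback controllers and motion decomposition are not reproduced.

```python
from typing import Tuple

G = 9.81  # gravitational acceleration, m/s^2
Vec3 = Tuple[float, float, float]


def vhip_accel(com: Vec3, cop: Vec3, lam: float) -> Vec3:
    """3D VHIP: c_ddot = lam * (c - r) + g, with g = (0, 0, -G).
    The leg force points from the CoP r through the CoM c; lam >= 0 because
    the ground can only push (unilateral contact)."""
    if lam < 0:
        raise ValueError("lam must be non-negative")
    return (lam * (com[0] - cop[0]),
            lam * (com[1] - cop[1]),
            lam * (com[2] - cop[2]) - G)


def simulate(com: Vec3, vel: Vec3, cop: Vec3, lam: float, dt: float, steps: int) -> Tuple[Vec3, Vec3]:
    """Integrate with semi-implicit Euler, holding the CoP and lam constant (open loop)."""
    for _ in range(steps):
        acc = vhip_accel(com, cop, lam)
        vel = tuple(v + a * dt for v, a in zip(vel, acc))
        com = tuple(c + v * dt for c, v in zip(com, vel))
    return com, vel
```

With the CoP directly under the CoM and $\lambda = g/z_0$, the model stays in equilibrium; shift the CoM horizontally and it diverges exponentially, which is the instability that balance controllers must counter by moving the CoP, varying height through $\lambda$, or both.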